Experiments

In our "Lucid Dream with Body Rhythm" project, Kyuwon, Yerin, and I conducted five experiments exploring the intersection of body rhythms and dream states, with a particular focus on lucid dreaming.

Using real-time body rhythm data, such as breath, pulse, and blinking patterns, we created a range of visual and sensory experiences that reflect how these physiological processes influence dream perception.

1. Body Rhythm

Experiment One.

Body Rhythm Data to Visual Art

We collected data such as breathing and heart rate from participants and transformed it into generative visual art using p5.js. This experiment examines how bodily rhythms during sleep can shape visual representations of dreams. The visuals were then compiled into posters and publications, capturing the rhythmic patterns as dream imagery.
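As an illustration only, here is a minimal Python sketch of the kind of mapping this involves: one breath reading and one heart-rate reading become the size and brightness of a form. Our visuals were made in p5.js; the sensor scales, value ranges, and the names `Frame`, `remap`, and `body_to_frame` are hypothetical and not taken from the project code.

```python
import math
from dataclasses import dataclass


@dataclass
class Frame:
    radius: float      # size of the central form, in pixels
    brightness: float  # 0.0 (dark) to 1.0 (bright)


def remap(value, in_min, in_max, out_min, out_max):
    """Linearly rescale value from [in_min, in_max] to [out_min, out_max], clamped to the output range."""
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(1.0, t))
    return out_min + t * (out_max - out_min)


def body_to_frame(breath, heart_rate_bpm, t_seconds):
    """Map one body-rhythm sample to visual parameters (hypothetical mapping).

    breath: normalized chest expansion in [0, 1] (assumed sensor scale)
    heart_rate_bpm: pulse in beats per minute
    t_seconds: time since the start, used to throb in time with the heartbeat
    """
    # Breathing sets the base size: inhaling grows the form, exhaling shrinks it.
    base = remap(breath, 0.0, 1.0, 50.0, 200.0)
    # The heartbeat adds a small periodic throb at the measured pulse frequency.
    beat_hz = heart_rate_bpm / 60.0
    throb = 10.0 * math.sin(2.0 * math.pi * beat_hz * t_seconds)
    # A faster pulse gives a brighter image (assumed range of 50 to 120 BPM).
    brightness = remap(heart_rate_bpm, 50.0, 120.0, 0.2, 1.0)
    return Frame(radius=base + throb, brightness=brightness)
```

Calling `body_to_frame` once per animation frame, as a p5.js-style draw loop would, with the latest sensor reading produces a form that swells with each breath and pulses with each heartbeat.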

2. Lucid Dream Simulator

Experiment Two.

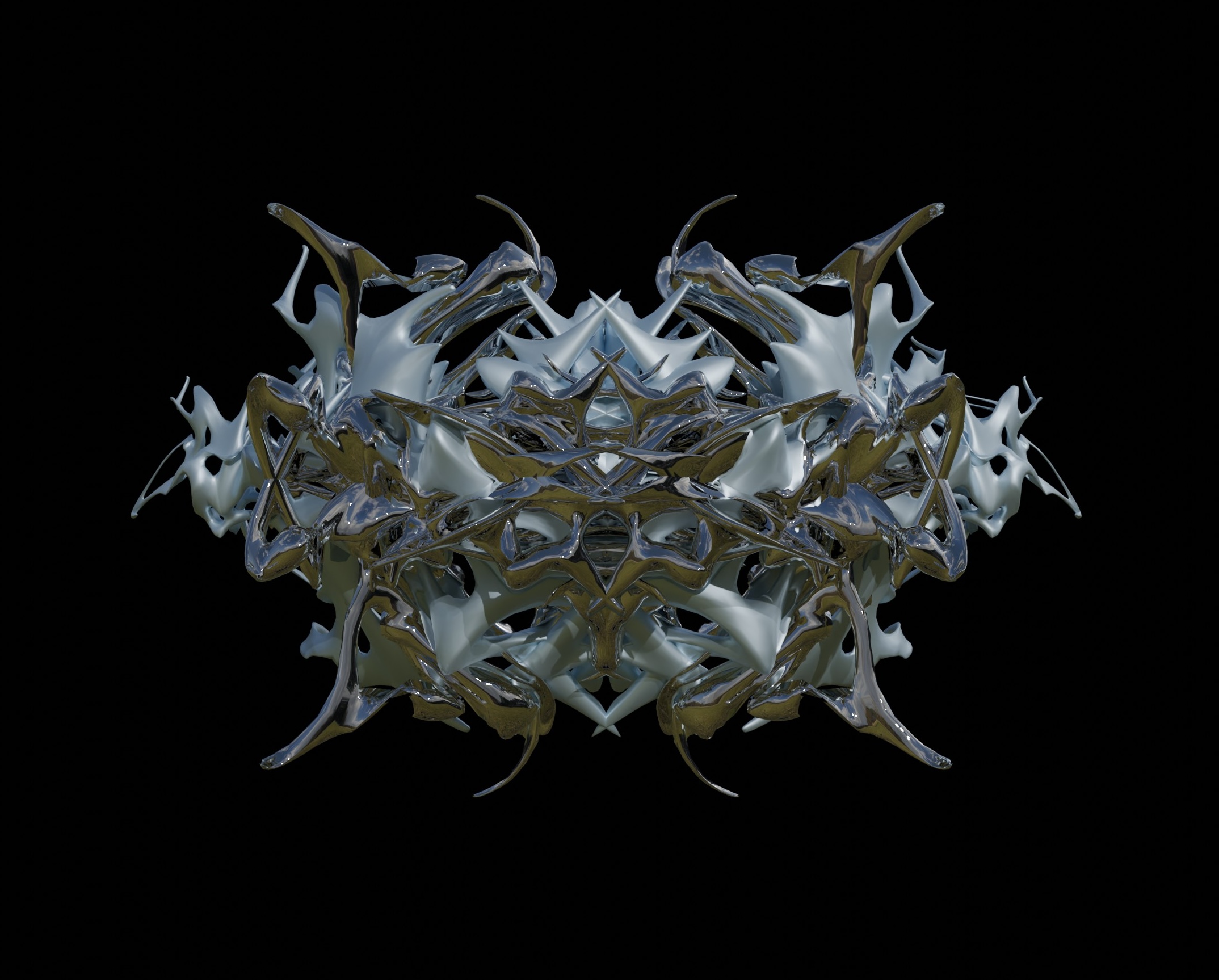

Simulating a Lucid Dream with Arduino and Blender

To simulate a lucid dream, we created surreal 3D elements in Blender and controlled them with an Arduino. A potentiometer lets users adjust the form of the "dream": curved shapes symbolize positive dreams, while sharp angles represent nightmares, reflecting the element of control in lucid dreaming.
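A minimal sketch of the potentiometer-to-form mapping, written in Python for illustration. It assumes a 10-bit reading (0 to 1023, as returned by `analogRead()` on boards such as the Arduino Uno) has already reached the computer, for example over serial. The helper names and the circle-to-star geometry are hypothetical stand-ins for the Blender model, not the project's actual setup.

```python
import math

ADC_MAX = 1023  # full-scale value of a 10-bit analogRead(), e.g. on an Arduino Uno


def sharpness_from_pot(raw):
    """Convert a raw potentiometer reading (0..ADC_MAX) to a sharpness value in [0, 1]."""
    raw = max(0, min(ADC_MAX, raw))  # clamp noisy or out-of-range readings
    return raw / ADC_MAX


def dream_outline(sharpness, points=8, radius=1.0):
    """Return the 2D vertices of a hypothetical 'dream' outline.

    sharpness 0.0: every vertex lies on a circle (rounded form, positive dream)
    sharpness 1.0: alternate vertices are pulled inward into a spiky star (nightmare)
    """
    vertices = []
    for i in range(points * 2):
        angle = math.pi * i / points
        # Odd-numbered vertices move toward the center as sharpness increases.
        r = radius if i % 2 == 0 else radius * (1.0 - 0.7 * sharpness)
        vertices.append((r * math.cos(angle), r * math.sin(angle)))
    return vertices


# Usage: a mid-range reading gives a shape halfway between rounded and spiky.
halfway = dream_outline(sharpness_from_pot(512))
```

In Blender, the same `sharpness` value could, for instance, drive the influence of a shape key that morphs a rounded mesh into a spiky one.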

3. 몽롱하다 (Haziness)

Experiment Three.

Body Rhythm Data to Visual Art

We incorporated the Korean concept of '몽롱하다,' meaning hazy or dazed, to visualize dreamlike states in p5.js using body rhythm data. These visuals evoke the blurred, ambiguous feeling associated with dream states.

4. Surreal Dynamics

Experiment Four.

TouchDesigner

We used TouchDesigner to create dynamic grid visuals that combine the themes of the body and lucid dreaming. We then turned these visuals, built from a variety of nodes, into sticker designs.

5. Spaces

Experiment Five.

Speech Recognition

In our final experiment, we combined speech recognition with a multi-layered process: speech data is converted into 3D shapes, which are then brought into TouchDesigner and further processed with p5.js. A printed visual sound score maps each voice command to the action it triggers, serving as a guide for users as they interact with the piece.
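The sound score is essentially a lookup from spoken words to actions. Below is a minimal Python sketch of that idea, assuming the speech recognizer has already produced a plain-text transcript. The vocabulary in `SCORE` and the action names are hypothetical, since the actual commands are not listed here.

```python
# Hypothetical vocabulary: spoken keyword -> visual action shown on the printed score.
SCORE = {
    "grow": "scale_up",
    "shrink": "scale_down",
    "spin": "rotate",
    "fade": "blur",
}


def actions_from_transcript(transcript, score=SCORE):
    """Return the actions triggered by a recognized transcript, in spoken order."""
    actions = []
    for word in transcript.lower().split():
        word = word.strip(".,!?")  # drop punctuation a recognizer may attach
        if word in score:
            actions.append(score[word])
    return actions
```

Keeping the vocabulary in a single table means the printed score and the running system can be generated from the same source, so the guide users read always matches what their voice actually triggers.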